A Talking Vending Machine Made Up of Agents

AI project for the course INN410/TIN170, LP4, VT2007 at Chalmers/Gothenburg University

Persons:

- Alberto Realis-Luc, Chalmers

- Ann Lillieström, Gothenburg University

- Henning Sato von Rosen, Gothenburg University

- Ilya Kulinich, Gothenburg University

- Liu Chao, Chalmers

Abstract

Most dialogue systems are complex and tightly tied to the domain for which they are made. No such complex system is born finished, so it must be easily extensible, and preferably capable of changing behavior as a reaction to changed circumstances. We implement a simple dialogue system as a community of agents. This means that you can add new information and capabilities to a running system when needed, also over the Internet.

1 Introduction

1.1 Motivating Example

You are on the tram. You change your plans and need to adjust your travel plan. The information you need exists, but you cannot access it. While it is not feasible to have a full-blown computer with keyboard, mouse and Internet connection on every tram, you can certainly have a ticket vending machine/travel information system with a microphone. Then the most powerful tool you can use for communicating with the information system is your own human language. But computers usually have no built-in support for respecting the way humans behave in a dialogue. While there exists an ever-growing number of natural-language-enabled information services, few of these give the same level of confidence and efficiency as a human service person does. Improving these dialogue systems is an interesting research area, and every evolutionary step will bring increased utility to today's many information system users, be they computer professionals or casual tram passengers.

1.2 The Human Dialogue Problem

For humans, dialogue is not a problem; it comes very naturally. Dialogue has been the driving force of language evolution, so natural language and human cognition are optimized for it.

However, making a computer behave acceptably in a dialogue with a human is quite another thing. The problems are manifold:

- Language users do not have precise knowledge about how human dialogue works.

- Programmers are used to representing essential objects like actions and preconditions as code and comments respectively.

This is a classical situation in many programming problems that can be classified as “AI”. The solution lies in “climbing the abstraction ladder”, that is, representing the data at an appropriate level of abstraction, so that the rules that define the system become clear, meaningful and readable.

But how to get there? No program as complex as a dialogue system can be born perfect and full-fledged. We think the solution lies in making the system extensible, so that it can stay alive and grow as experience and knowledge are gathered. In our project we use an agent framework in order to have a system of loosely coupled, dynamically interchangeable agents.

We say, the solution does not lie in defining the perfect system from the beginning, but in defining an extensible system that can grow and be adapted as new discoveries are made.

1.3 Our Ideas

1.3.1 Use an Agent Architecture

A ticket sales agent with the ability to converse with humans in natural language is confronted with challenges of various kinds. Understanding and producing utterances in natural language is perhaps the most obvious difficulty. We need to define what it means for the system to understand an utterance, and what representation this knowledge should have. Remembering previous states of the dialogue is essential for an intelligent system. The system also needs a way of choosing the right action based on the current state.

The main goal of the sales agent is of course to sell a ticket; however, this must be achieved in a tactful manner. We need to choose the right item based on each customer’s personal requirements, and we don’t want to bother them with too many questions. We need to know just enough about them to satisfy their needs.

From the previous requirements it is clear that there are several important parts that together make up the ticket sales agent. We split the system into smaller sub-agents, each having their own area of expertise. These sub-agents cooperate in exploring the possible ways of accomplishing a task.

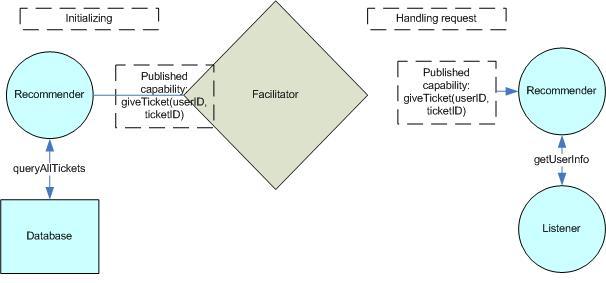

The Open Agent Architecture (OAA) provides a framework for constructing an agent community. One advantage of this framework is its use of a Facilitator agent, a coordinator that delegates tasks to the community. Instead of each agent hard-coding which partners it interacts with, it informs the facilitator of the needs it has and what services it has to offer. When requesting a service, an agent constructs a goal and relays it to a facilitator that coordinates the appropriate service providers in satisfying this goal. When making a request, the agent is not required to specify (or know of) any agents to handle the call. The dependencies between the components are thus greatly reduced, with more flexibility as a result.
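The delegation idea can be illustrated with a small Java sketch. This is a toy model written only for illustration, not the OAA library API: the class ToyFacilitator and its methods publish and solve are hypothetical names. It shows one thing only, namely that a requester names a capability and never a provider.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Toy stand-in for a facilitator; hypothetical, NOT the OAA library API. */
final class ToyFacilitator {
    // capability name -> providers that declared they can handle it
    private final Map<String, List<Function<String, String>>> providers = new HashMap<>();

    /** An agent declares a service it offers. */
    void publish(String capability, Function<String, String> provider) {
        providers.computeIfAbsent(capability, k -> new ArrayList<>()).add(provider);
    }

    /** A requester states a goal; it does not know which agent will handle it. */
    List<String> solve(String capability, String argument) {
        List<String> answers = new ArrayList<>();
        for (Function<String, String> p : providers.getOrDefault(capability, List.of())) {
            answers.add(p.apply(argument));
        }
        return answers; // empty when no agent offers the capability
    }
}
```

In this sketch, replacing the provider of a capability means publishing a different function under the same name; the requesting code does not change.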

All communication between agents is done using a logic-based language called the Interagent Communication Language (ICL), which is based on Prolog. This design makes it possible to implement agents in any programming language for which there is an OAA agent library available. We have chosen to implement the agents in Java and Prolog, allowing everybody to work with the language they feel most comfortable with.

1.3.2 Use a Simple but General Semantic Representation

At the beginning of the project we decided to use a semantic network for representing knowledge. The advantage is that you can accept and store any comprehensible information the user gives1), and then in a specific application you can choose to look only at a part of it. This approach has the following advantages:

- It frees you from the need to hard-wire the user's ability to add data into each specific application.

- It gives the user a justified feeling that the system accepts what the user says if it is sensible. This adds to the user's feeling of predictability and confidence.

- This kind of general data storage can also be used to store much of the program's rules and data, meaning that these rules and data can be made accessible for the user to query. Example2)3).

1.3.3 A Noun-Linked Graph

We used a semantic network called a Noun-Linked Graph that one of the project's members has been working on in another project. The advantage of this kind of semantic network is that it uses convention instead of design. When you choose where to put information in the graph, you begin by transforming the thing you want to represent into natural language utterances that use only nouns, not verbs or adjectives. Then you label the arcs using the nouns and put the data in the nodes. You can also use a full relation as the label of an arc. It is still a noun, only parametrized.

2 How our Solution Works

2.1 Structure of the agent community

2.1.1 Overview

For the purposes of our project we designed and implemented the following agents:

- User interface agent

- Listener agent

- Dialogue manager agent

- Recommender agent

- Database

- Output agent

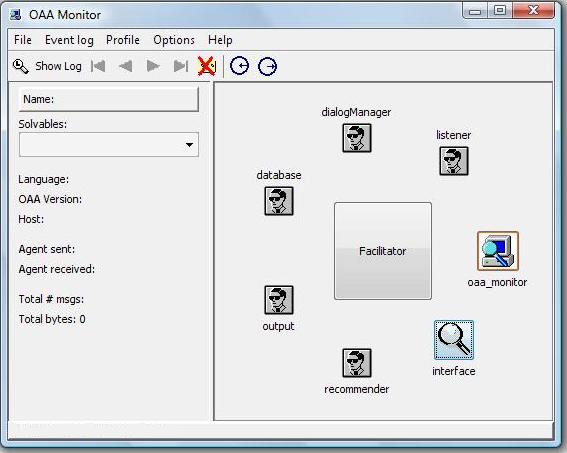

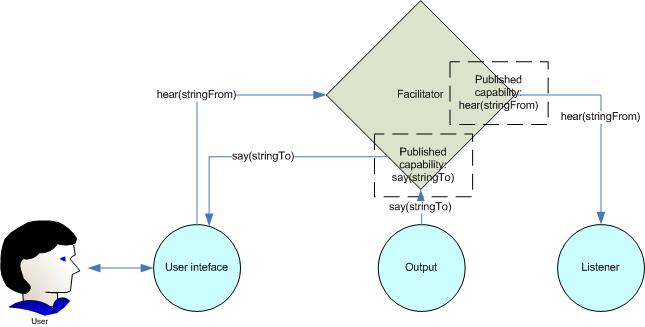

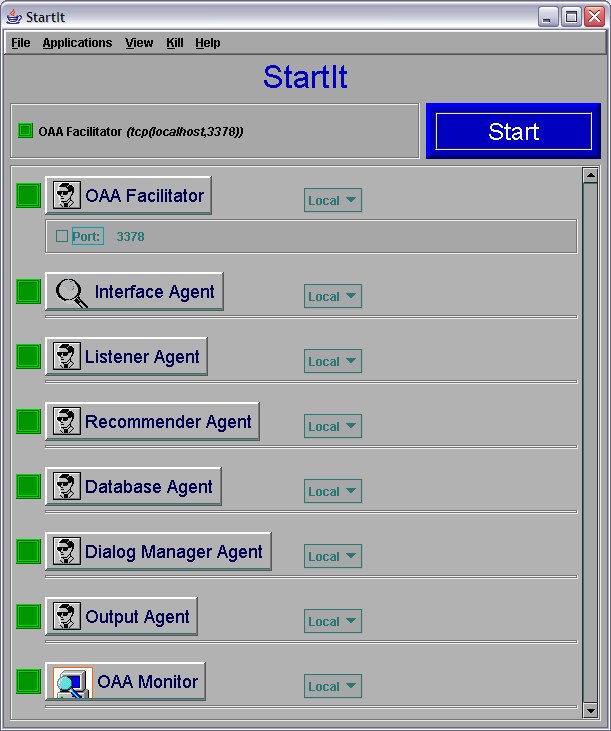

Here is the screen-shot of our OAA system:

All agents communicate with each other by publishing capabilities and sending requests.

2.1.2 Connections between the agents

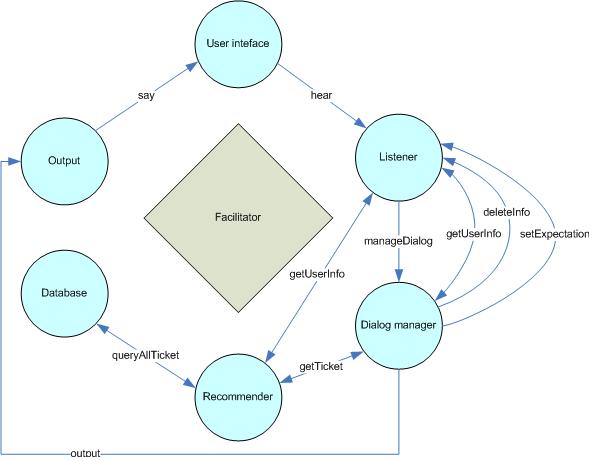

In general, the OAA architecture allows connections between any two agents in the community. By specifying each agent's capabilities and designing its requests, we choose how the agents call each other in our project. Here is the list of all possible connections:

- User interface → Listener, request: hear

- Listener → Dialog manager, request: manageDialog

- Dialog manager → Listener, request: deleteInfo

- Dialog manager → Output, request: output

- Dialog manager → User interface, request: say

- Output → User interface, request: say

- Dialog manager ↔ Listener, request: getUserInfo

- Dialog manager ↔ Recommender, request: giveTicket

- Recommender ↔ Database, request: queryAllTickets

- Recommender ↔ Listener, request: getUserInfo

Notation: → process continues within new agent, ↔ new agent is requested for some information, and process continues in old agent

2.1.3 How does it work

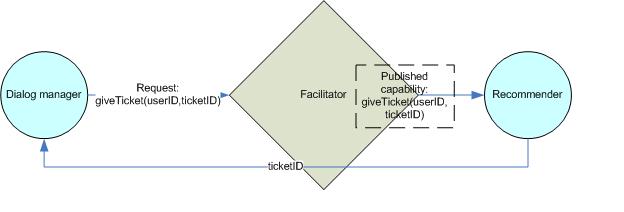

Every agent specifies the form of its request, what information is sent to the other agent, and handles the answer if needed.

For example, giveTicket(userID, ticketID) means that the agent community needs to find the best ticket for a specific user (identified by a unique userID) and store it in ticketID for further use. The agent that publishes the capability giveTicket must support exactly the same format.

2.1.4 Future updates

OAA allows a flexible architecture for further development of the system. Any agent can easily be replaced by a more efficient version without any changes to the other agents. In the same way, agents can be merged or split. New agents can also be added, such as a speech recognizer or an Internet synchronizer.

2.2 The User Interface Agent

2.2.1 Overview

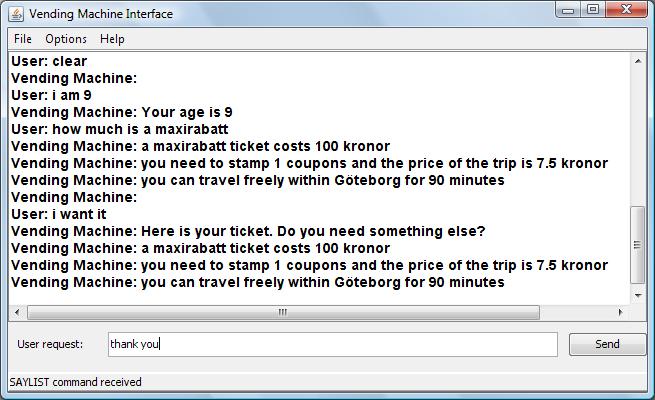

The interface agent is what allows the end user to talk with the vending machine. The customer sees only this agent when using the vending machine and has no need to interact with any other part of the system. The interface agent is written in Java and uses the Swing graphics library. It is designed as a classic chat-style window, in order to make the interaction feel closer to an actual dialogue. Thus we have:

- A text field where the customer can type his utterances

- A button for sending the request

- A history listing with scrolling facilities4).

2.2.2 Connecting to the OAA agents community

Being an OAA agent, the interface tries to connect to an OAA facilitator when it is started. By default it connects to localhost at port 3378. A different location can be chosen by clicking OAA connect in the File menu. A message in the status bar of the window reports whether the connection succeeded. If we launch the interface on a machine where the facilitator is not running, the message Connection FAILED appears in the status bar. We then have to start the facilitator and connect the interface using the OAA connect command from the File menu. The File menu also contains other commands, e.g. Clear chat and Exit. In the Options menu we can choose the window style and whether or not to redirect the output to the Java console.

2.2.3 How it works

Basically the interface does two jobs:

- Send a request, as a string in natural language, from the user to the system

- Receive an answer from the system, always as a string in natural language, and display it to the user

When the send button is pressed or the return key is typed, the interface checks before sending that the string in the input text field is not empty and that it is connected to the facilitator. If the input string is empty or there is no connection, the user is warned in the status bar at the bottom of the window. Otherwise the interface sends the ICL command hear(<Message>), where <Message> is the string typed by the user.

The return value of the function oaaSolve tells the interface whether any other agent has received and processed its hear command. The agent that receives this command has to set something (it doesn't matter what) in the OAA answers, which makes the return value true. The interface uses this return value to find out whether the listener has received the message, and it displays a notice in the status bar if it does not get the acknowledgement.
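The send-side checks described above can be sketched in Java as follows. This is a minimal sketch under stated assumptions: the class SendCheck, the method send and all status texts are hypothetical, and the Predicate parameter merely stands in for the oaaSolve call on hear(<Message>), whose real signature is not shown in this report.

```java
import java.util.function.Predicate;

/** Sketch of the checks made before a hear command is sent; all names are hypothetical. */
final class SendCheck {
    /**
     * Returns the text to show in the status bar.
     * The hear predicate stands in for the oaaSolve call: it returns true
     * when some agent set an answer, i.e. acknowledged the command.
     */
    static String send(String input, boolean connected, Predicate<String> hear) {
        if (input == null || input.isEmpty()) {
            return "Nothing to send";                  // empty input: warn, do not send
        }
        if (!connected) {
            return "Not connected to a facilitator";   // no connection: warn, do not send
        }
        // Only now is the request actually issued.
        return hear.test(input) ? "Sent" : "No acknowledgement received";
    }
}
```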

The other job of the interface is to display the answers of the system. After connecting, the interface registers its OAA solvable: the ICL command say(<Message>), where <Message> is a natural language string. In this way the interface can recognize every say command from the facilitator and display Message in the chat as an answer from the vending machine. After receiving a say command, the interface sets something in the OAA answers as an acknowledgement, so that the agent who sent the command knows that its message has been displayed.

2.2.4 Extra features

Being written in Java, the interface can also be launched from an applet in a web page. This is what the VendingApplet.java program does.

2.2.5 Future updates

The Open Agent Architecture offers many advantages. We can imagine many interfaces running on low-profile machines in different places, for example at every bus station, all connected to a single server running the facilitator and the other agents. To do this we need to assign an ID to each interface, and the system has to handle different, simultaneous dialogues by ID. All this could be done as a future update of the project.

2.3 The Listener Agent

2.3.1 Overview

2.3.1.1 The Listener's Role as an Agent

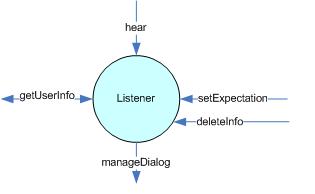

The Listener agent plays an important role in the agent community by interpreting the user's utterances and learning from these. This data is then stored so that other agents can make use of it.

Its most important capability is hear, which takes a string and, if possible, parses it to a representation of the system's knowledge of the user. This knowledge is stored together with the collected knowledge from the previous states. When the hear command has been called, the Dialogue Manager agent is notified that the user information has been updated. The capability getUserInfo allows other agents to read what the Listener has learnt so far. Other agents can also delete information from the state by calling the predicate deleteInfo. This is used by the Dialogue Manager to remove the information about a user's need after fulfilling it. The capability setExpectation allows other agents to extend the grammar as explained below.

The Listener also handles parts of the output generation such as information about tickets.

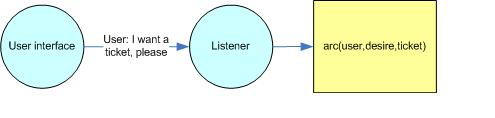

2.3.1.2 Knowledge Representation

Knowledge is represented as a graph of nodes with labelled arcs, where the nodes take on various values and the arc represents a relation between them.

As an example, the system's knowledge of a user's age being 25 is expressed as “arc(user,age,25)”. The Listener also has some ability to draw its own conclusions; for example, if a user's destination and start stations are known, it can infer the number of zones the user has to travel.

The idea is that the Listener listens and learns, and shares its knowledge with the community. The knowledge base is then analysed by the Dialogue Manager in order to decide on an action. The goal is to collect enough information about the user to be able to recommend and sell a ticket.
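To make the arc representation concrete, here is a minimal Java sketch of a store for such arcs. The actual knowledge base lives in the Listener; the class ArcStore and its methods are hypothetical names. The sketch assumes one value per (node, label) pair, with a newer value replacing an older one, and it leaves out inference such as the zone calculation.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical in-memory store for arcs of the form arc(Node, Label, Value). */
final class ArcStore {
    // key "node/label" -> value; assumption: at most one value per node and label
    private final Map<String, String> arcs = new LinkedHashMap<>();

    private static String key(String node, String label) {
        return node + "/" + label;
    }

    /** Adds arc(node, label, value); a newer value replaces an older one. */
    void assertArc(String node, String label, String value) {
        arcs.put(key(node, label), value);
    }

    /** Reads the value at the end of the arc, if the arc is known. */
    Optional<String> value(String node, String label) {
        return Optional.ofNullable(arcs.get(key(node, label)));
    }

    /** Removes the arc, e.g. after the need it describes has been fulfilled. */
    void deleteInfo(String node, String label) {
        arcs.remove(key(node, label));
    }
}
```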

2.3.2 Parsing

2.3.2.1 Definite Clause Grammar

The grammar is defined in the Definite Clause Grammar notation of Prolog. DCGs provide a simple and natural way of defining grammar rules. The typical shape of a grammar clause is:

Sentence --> NounPhrase, VerbPhrase, {Semantic actions}.

meaning that a possible form of a sentence is a noun phrase followed by a verb phrase.

The left hand side of the rule consists of a non-terminal symbol, optionally followed by a sequence of terminal symbols. The right hand side may contain any sequence of terminal or non-terminal symbols, separated by conjunction and disjunction operators. Extra conditions may also be included in the body of the clause.

The parser is adapted to its small domain and separates different noun types such as tickets and user types. Some verbs are augmented with a parameter that describes their meaningful context. This approach decreases the flexibility somewhat, but greatly simplifies extracting the meaning from the utterances by disallowing sentences that are grammatically correct but make no sense in the context.

The phrases that one can expect mainly concern the user stating some information about themselves, or querying for information about tickets. Whereas the types of phrases are rather limited, there is still an unlimited number of ways to express these. The grammar allows the user to express themselves in various ways, defining synonyms and alternative formulations. The “maybe”-rule allows optional words such as please, so that these will not affect the parsing. It is simply defined as:

maybe(Word) --> [Word];[].
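For readers more used to Java than to DCGs, the effect of this rule on a token list can be sketched as below. The class and method names are hypothetical, and the sketch is deterministic: it always consumes the word when it is present, whereas the Prolog rule can also be retried on backtracking with the empty alternative.

```java
import java.util.List;

/** Hypothetical Java counterpart of the DCG rule maybe(Word) --> [Word];[]. */
final class OptionalWord {
    /**
     * Returns the position after the optional word:
     * one step further if the word is at pos, otherwise pos unchanged.
     */
    static int maybe(String word, List<String> tokens, int pos) {
        boolean present = pos < tokens.size() && tokens.get(pos).equals(word);
        return present ? pos + 1 : pos;
    }
}
```

A caller simply continues parsing from the returned position, so the rest of the sentence is handled the same way whether or not the optional word was there.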

2.3.2.2 Assumptions

Since we're assuming that speech recognition is solved, spelling mistakes and punctuation are not dealt with. Pauses in speech are assumed to divide the sentence into subordinate clauses using commas. These are separated from the words with a whitespace. All numbers are represented with digits only. The speech recogniser is assumed to output “I am” and “is not”, etc. and never “I'm” or “isn't”.

2.3.2.3 Dealing with States

When a ticket type has been mentioned, this type is activated to represent the meaning of “it” or “them” etc. This enables the grammar to understand sentences like “I need a maxirabatt”, “How much is it?” but not “I need a ticket for my dog”, “It wants to go to Chalmers”. These kinds of referential ambiguities are not easily dealt with5). We assume that the former kind of sentence is more likely to occur in our application, and thus restrict ourselves to ticket types regarding this matter.

When a user has been asked a question, an expectation needs to be set in order to understand what their response refers to. If the Dialogue Manager decides to ask the user about their age, it calls the capability setExpectation(age) of the Listener agent. This activates the grammar rule that makes it possible to parse a single number and interpret it as the user's age. This can be done in a similar way with any of the possible labels of the knowledge arcs, where the label defines a property of the user, and we're asking for the value of this property.

2.3.2.4 Keywords

In the case of a failed parse, the string is searched for keywords, such as ticket types or station names, in order to guess the user's intentions. These guesses are represented as “maybe-arcs”, which look the same as the regular arcs, but with the word maybe added to the label to mark that this knowledge is uncertain. The user should then be asked to confirm this information in order to replace the maybe-arc with a regular arc. When asking the user to confirm a maybe-arc with label L and value V, the expectation is set to (L,V). A positive answer will then result in the assertion of the arc (user,L,V) to the information state. The maybe-arc is retracted from the information state in either case.
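The confirmation cycle for maybe-arcs can be sketched in Java as follows. This is an illustrative model only: the class ConfirmationState and its methods are hypothetical, arcs are reduced to label/value pairs about a single user, and the real Listener keeps this state in Prolog.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical model of maybe-arcs and the expectation (L,V) for one user. */
final class ConfirmationState {
    private final Map<String, String> arcs = new HashMap<>();      // confirmed: label -> value
    private final Map<String, String> maybeArcs = new HashMap<>(); // guesses:   label -> value
    private String expectedLabel; // pending expectation, or null when nothing is expected
    private String expectedValue;

    /** A keyword match after a failed parse produces an uncertain guess. */
    void guess(String label, String value) {
        maybeArcs.put(label, value);
    }

    /** Called when the user is asked to confirm the guess (L,V). */
    void setExpectation(String label, String value) {
        expectedLabel = label;
        expectedValue = value;
    }

    /** Handles the yes/no answer to the pending confirmation question. */
    void answer(boolean yes) {
        if (expectedLabel == null) {
            return; // no question was pending
        }
        if (yes) {
            arcs.put(expectedLabel, expectedValue); // assert the regular arc
        }
        maybeArcs.remove(expectedLabel);            // retracted in either case
        expectedLabel = null;
        expectedValue = null;
    }

    String confirmed(String label) {
        return arcs.get(label);
    }

    boolean hasGuess(String label) {
        return maybeArcs.containsKey(label);
    }
}
```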

2.3.3 Answering Queries

Price queries of tickets are handled directly by the Listener Agent. Since a price query does not affect the user information state, there is no need to notify the recommender. The Listener does a lookup in the list of ticket information provided by the Database Agent and constructs a reply to send to the Interface, using the “say” command. This is a hack. Ideally, all output should be handled through the Output Agent.

2.3.4 Editing the Knowledge Base

The Listener provides a number of capabilities for editing the knowledge base. The other agents can delete and add information as they wish. If an agent has fulfilled a need of the user, the information about this need is deleted. If the user has been asked a question, it is possible to add the fact that this question has been asked, in order to avoid repeating it.

2.3.5 Future Updates

There are many opportunities for further enhancement of the Listener Agent. The expressiveness of a grammar can naturally always be improved upon. The vocabulary can be extended and a greater variety of phrases can be added. Grammar rules can also be augmented to include inflection for verbs, etc.

Negations are currently not dealt with. A way of handling this could be to include negated arcs in the knowledge base.

If an arc is added that is inconsistent with the current state, the most recently given information is used. Different ways of handling inconsistencies could be explored further. The grammar does not currently deal with the comparison of adjectives. The user cannot, for example, state “I want a cheaper ticket”. This is obviously something that would be desirable in any vending machine system, and should be included in a future version.

Another possibility is to add an administrator mode, where the user can add new ticket types and update prices using natural language.

The OAA allows the use of multiple Listener Agents in a system. All of the Listeners can then listen actively to the input, and let the one with a successful parse send the message further. This makes it possible to add more languages, which can be used alternately in the same session.

One should note that changes made regarding the knowledge base affect other agents in the community. Updates must always be synchronised.

2.4 Dialog Manager Agent

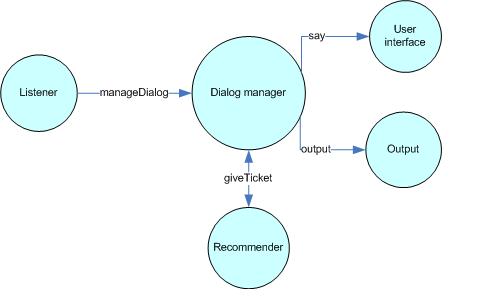

2.4.1 Overview

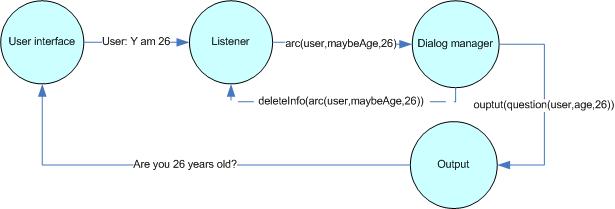

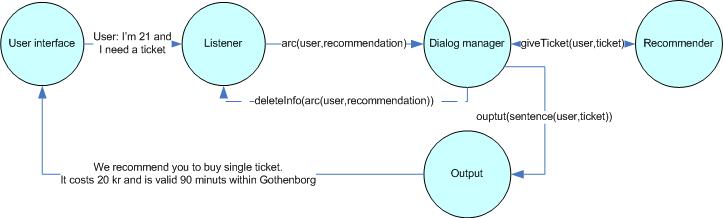

The dialogue manager agent handles the dialogue with the user. It reads information about the user and then decides upon the next action, such as a recommendation, a question to the user, or an answer to the user's request. It also stores information about what is expected from the user. For example: the user wants a ticket, but the system doesn't know the user's age. The dialogue manager sends a request to the output agent to ask the user about their age, and remembers that the question has been asked. When the listener parses the next string from the user, it uses the expectations list to produce a correct parse. So the user's answer “23” will be assigned to their age.

2.4.2 Connecting to other agents

The dialogue manager is called from the listener every time the listener receives a string from the user interface agent. The dialogue manager asks the listener for detailed information about the user. If needed, the dialogue manager calls the recommender and gets information about the best ticket for the particular user. When the dialogue manager has decided on the next action, it either sends a request to the output agent to ask the user a question or give them some information (if a complicated sentence is needed), or sends a string directly to the user interface agent (if it is a simple question).

2.4.3 How does it work

When it is called, it updates the information about the user, which is stored in the listener agent. The user information contains an action list, which consists of “maybe” actions and real actions. A “maybe” action means that the listener parsed the sentence but is not fully certain of the result, so it has assumed, for example, that the user stated his/her age. The dialogue manager then verifies this information by sending a question that can be answered “yes” or “no”. After this the dialogue manager asks the listener to delete the record of this action, and we consider that case solved. A real action means that the system has parsed the user's speech and one of the actions is now needed, e.g. a recommendation, a ticket and so on. When the decision about the next step in the dialogue is clear, the dialogue manager sends a command to the output agent, such as output(question(user, age)) or output(question(user,age,26)) for a “yes/no” question. Due to lack of time in the project, some strings are produced directly in the dialogue manager. In this case the string is sent to the user interface agent.

Maybe action:

Real action:

(At the moment, some of the capabilities intended for the output agent are handled by the listener agent or the dialogue manager itself.)

2.4.4 Future updates

As the dialogue manager is strongly connected with the listener agent, they should be merged. The dialogue manager could accept new states and actions, such as the user's mood or preferences about which directions to go. On the other hand, the dialogue manager could use more complicated phrases when addressing the user; in this case the output agent should be capable of handling these requests. After all necessary capabilities have been added to the output agent, all messages from the dialogue manager to the user should go through the output agent. That means that the dialogue manager will no longer send messages to the user interface agent.

2.5 Recommender agent

2.5.1 Overview

The recommender agent chooses the cheapest ticket that satisfies the user's preferences. This agent is called from the dialogue manager agent and reads information from the database (listener agent). The recommender agent is written in Java.

2.5.2 Connecting to the OAA agents community

When the recommender agent starts, it tries to connect to an OAA facilitator. By default it connects to localhost at port 3378. Once it is connected to the facilitator, it publishes the capability giveTicket. Then the recommender agent reads all information about the available tickets from the database in the listener agent and updates its internal database. So, if one changes the ticket list in the listener agent, the recommender agent should be restarted. Every time the recommender agent is requested to give a ticket, it updates the current information from the database6).

2.5.3 Preconditions

We assume that we know the user's age and preferred frequency of travel. All other preconditions can be left unspecified, but when added they specify the user's wishes more precisely.

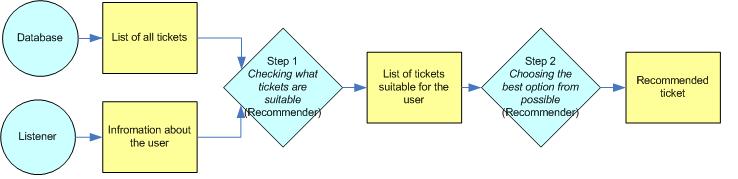

2.5.4 How does it work

Every time the dialogue manager needs to find the most suitable ticket for the user, it sends a giveTicket request to the agent community. The facilitator then forwards this request to the recommender agent, which has published the corresponding capability. The recommender agent reads all information about the user and updates its internal user database. Then it makes a list of all tickets that suit the user's preferences. If the list is empty, meaning there are no suitable tickets for the user, the recommender sends back an empty list as an answer. Otherwise, it chooses the ticket with the lowest price per trip from the list of suitable tickets.
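The selection step can be sketched in Java as below. This is not the project's recommender code: the Ticket fields, the age-range suitability test and the example prices used in any call are assumptions made for illustration, and an empty Optional plays the role of the empty list sent back when no ticket is suitable.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical ticket record; the real ticket attributes are not listed in this report. */
final class Ticket {
    final String id;
    final double price; // total price of the ticket
    final int trips;    // number of trips it covers (assumed > 0)
    final int minAge;
    final int maxAge;

    Ticket(String id, double price, int trips, int minAge, int maxAge) {
        this.id = id;
        this.price = price;
        this.trips = trips;
        this.minAge = minAge;
        this.maxAge = maxAge;
    }

    double pricePerTrip() {
        return price / trips;
    }
}

final class ToyRecommender {
    /** Keeps the tickets that suit the user, then picks the lowest price per trip. */
    static Optional<Ticket> giveTicket(List<Ticket> allTickets, int userAge) {
        return allTickets.stream()
                // Assumption: suitability is reduced to an age range here.
                .filter(t -> userAge >= t.minAge && userAge <= t.maxAge)
                .min(Comparator.comparingDouble(Ticket::pricePerTrip));
    }
}
```

Further preconditions, such as travel frequency, would become additional filter conditions before the minimum is taken.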

The system recognizes users by their ID, so e.g. a user with a child can be represented as two different users within the system. That means that the recommender can find the best ticket for both of them. As there are no group tickets, we don't need to care about family discounts or other discount types.

2.5.5 Future updates

The OAA architecture allows this agent to be changed without changing any other agents. One option is to add a layer before the ticket recommendation that checks whether the preconditions are satisfied. The agent could then return different results according to the names of the unsatisfied preconditions. Group discounts could also be implemented, so that the recommender accepts a list of user IDs and finds the appropriate group ticket. That could be useful for family travel or other types of discounts.

2.6 The Output Agent

2.6.1 Overview

The Output Agent consists of:

- An English grammar

- Canned Text: A mapping from Dialogue Manager messages to raw text

- A wrapper that publishes this functionality as an OAA capability, output/1

Calling scheme:

- The Dialogue Manager calls output(Message)

- The Output Agent translates this into a string

- The Output Agent publishes the string to the user by calling the User Interface Agent

2.6.2 How it Works

When a message arrives, there are two possibilities:

2.6.2.1 Simple Message

If the message is fairly simple, Output maps it directly to text:7)

output(question(user,frequency)):-!, say('How often do you travel?').
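The same canned-text idea, expressed in Java for comparison, is a plain lookup from message to string. This is a hedged sketch: the class CannedText is hypothetical, messages are represented as strings rather than ICL terms, and the entry for question(user,age) is an invented example. An empty result marks a message that is too complex for canned text.

```java
import java.util.Map;
import java.util.Optional;

/** Hypothetical canned-text table: Dialogue Manager message -> raw text. */
final class CannedText {
    private static final Map<String, String> TEXTS = Map.of(
            "question(user,frequency)", "How often do you travel?",
            // Assumed entry, added only to show a second mapping.
            "question(user,age)", "How old are you?");

    /** Returns the canned text, or empty when the message needs the grammar instead. */
    static Optional<String> render(String message) {
        return Optional.ofNullable(TEXTS.get(message));
    }
}
```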

2.6.2.2 Complex Message

If the message is complex, e.g. the Dialogue Manager sends the precondition of an action to be asked as a question to the user, then Canned text is not expressive enough, so the grammar is used instead. Ideally this would be done directly, but since the output grammar semantics is not the same8) as that of the rest of the system, a mapping is done first.

Let me say this clearly. Mapping between different semantic representations is not a thing you would want to do when you design your own dialogue system. Interestingly, the reason seems to be that human thinking is highly optimized for what it can handle as meaningful data. Raw semantic representations usually have little or no meaning to a human reader, so the advice is to keep as much as possible of the meaning you want to express in your dialogue system at the natural language level. 9)

2.6.3 Feature Highlights

Note: Since the Output agent was added late in the project, many of its capabilities are not used, and its development was stopped, so not every sensible thing that has a semantic representation has a serialization defined by the output agent grammar. Thus, instead of an in-depth presentation, we give some feature highlights here that are interesting in their own right:

2.6.3.1 Bidirectional Grammar

The grammar works equally well in both directions. This makes testing very much easier. Testing in Prolog can be daunting, since if there is an error, usually the only output is just no, and most debuggers step you through lots of intermediate steps before hitting the interesting point. A bidirectional grammar is also much easier to experiment and play with, since you never actually have to type in raw semantics in order to serialize something, you just run the grammar backwards:

rw(M,"I am a cow",[]),rw(M,Cs,[]).

Both ways, same result:

| ?- rw(M,"I am very very very happy",[]), rw(M,_Cs,[]), pcs(_Cs).

I am very very very happy

M = info(pron(sender)/mood(happiness):std(8))

Both ways, another result:

| ?- rw(M,"the mother of the mother of me",[]), rw(M,_Cs,[]), pcs(_Cs).

the mother of my mother

M = pfr(pron(sender)/u mother/u mother)

2.6.3.2 Universal Starting Symbol

Any meaningful part10) of an utterance will be recognized/produced by the grammar

The grammar defines one universal starting symbol, rw11). This means that any part of a well-formed expression will be accepted by the grammar. This is very helpful in debugging: If a whole sentence fails to be serialized, it is easy to check that its parts can be serialized and then identify the rule where the deviation resides.

In essence, having a universal starting symbol means that you export all the left-hand sides of the production rules out of the grammar. A full sentence:

| ?- rw(M,"how old are you?",[]), rw(M,_Cs,[]), pcs(_Cs).

how old are you?

M = snt(q(pron(receiver)/u age)) ?

A part of the sentence has meaning too:

| ?- rw(M,"how old",[]), rw(M,_Cs,[]), pcs(_Cs).

how old

M = ki(adj(age:unknown)) ?

2.6.3.3 Integrated Morphology

Morphological information is encoded as grammar rules

The grammar needs no tokenizer, and can perform morphological synthesis/analysis as a normal grammar rule. Consider this utterance:

| ?- rw(M,"I am 26 years old",[]), rw(M,_Cs,[]), pcs(_Cs).

I am twenty six years old

M = info(pron(sender)/u age:pl(26,year)) ?

There is no information in the grammar about a word called “years”. Instead there is a rule:

Rules for plural:

rw pl(1,Stem) --> rw num(1)++rw noun(Stem).

rw pl(N,Stem) --> rw num(N)++rw pl(unknown,Stem).

rw pl(unknown,Stem) --> rw noun(Stem)**"s".

Running it:

| ?- rw(M,"26 years old",[]), rw(M,_Cs,[]), pcs(_Cs).

twenty six years old

M = adj(u age:pl(26,year))

Exceptions to the rule: Just define it at the plural level:

rw pl(unknown,'SEK') --> "kronor".
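The logic of these plural rules, read in the serializing direction only, can be sketched in Java. This is a simplification and not a translation of the grammar: the class Plural is hypothetical, numbers are printed as digits rather than words, the singular form is taken to be the stem itself, and the bidirectional behaviour of the grammar is not reproduced.

```java
import java.util.Map;

/** Hypothetical one-way sketch of the plural rules: regular "s" plus an exception table. */
final class Plural {
    // Exceptions are defined at the plural level, like pl(unknown,'SEK') --> "kronor".
    private static final Map<String, String> IRREGULAR = Map.of("SEK", "kronor");

    /** Plural surface form of a stem: the exception if one exists, else stem + "s". */
    static String pluralForm(String stem) {
        return IRREGULAR.getOrDefault(stem, stem + "s");
    }

    /** A count followed by the noun in the matching number. */
    static String phrase(int n, String stem) {
        // Assumption: the singular surface form equals the stem.
        return n + " " + (n == 1 ? stem : pluralForm(stem));
    }
}
```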

Example, the prefix un-:

| ?- rw(M,"unhappy",[]), rw(M,_Cs,[]), pcs(_Cs).

sad

M = adj(mood(happiness):std(-1))

| ?- rw(M,"happy",[]), rw(M,_Cs,[]), pcs(_Cs).

happy

M = adj(mood(happiness):std(1))

Depending on the language you are implementing, integrated morphology can be more or less important. It's nice to get rid of the tokenizer and it feels natural to use it. We recommend the approach.

2.6.4 Known issues

- The scope of the grammar is very small, since development stopped when it was clear that it was not really going to be used in the project.

- The grammar does not handle casing.

- Pronouns behave differently from non-pronouns. It would be better to represent them as paths. At present we need duplicate rules in some places. A possible solution might be to use a special last link so that e.g. a verb can recognize pronoun categories and use them to choose the correct form.

2.6.5 Open Issues

- When to dispose of agreement information

- On-line parsing/serialization

- Explicit information in the grammar about direction (parsing or serializing). This would simplify the grammar a lot. In some places a rule is not symmetric and needs special cases for parsing and serializing respectively. At present we test whether variables are instantiated in order to decide the current direction. This is ugly and tricky, so explicit direction information would make the grammar easier to read and define.

- Explicit information about direction would also make it much easier to support robustness, allowing one to add rules freely on the input side without applying these rules backwards when serializing. This is very important since some robustness rules loop if run backwards, e.g. a rule allowing arbitrarily many spaces between words.

- Explicit information about the language in which a rule holds. This would make it easy to:

  - Extend the grammar to more than one language

  - Infer the language of the user

  - Serialize in just one language but accept mixed input if a monolingual parse fails.
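The idea of explicit direction information, raised in the list above, can be sketched as follows in Python. Everything here is hypothetical: the `Rule` record, the direction tags and the meaning labels are invented for illustration and do not reflect the project's grammar format. Rules tagged "parse" are accepted on input but never used when producing output, so a robustness rule cannot be run backwards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    surface: str     # text form
    meaning: str     # hypothetical meaning label
    direction: str   # "both", "parse" (input only) or "serialize" (output only)


RULES = [
    Rule("I am", "sender_is", "both"),
    Rule("I'm", "sender_is", "parse"),   # robustness: accepted, never produced
    Rule("hello", "greet", "both"),
    Rule("hi", "greet", "parse"),
]


def parse(text):
    """Text to meaning, using rules valid in the parsing direction."""
    # Parse-only robustness: any run of whitespace counts as one space.
    text = " ".join(text.split())
    for rule in RULES:
        if rule.direction in ("both", "parse") and rule.surface == text:
            return rule.meaning
    return None


def serialize(meaning):
    """Meaning to text, using rules valid in the serializing direction."""
    for rule in RULES:
        if rule.direction in ("both", "serialize") and rule.meaning == meaning:
            return rule.surface
    return None
```

Because the whitespace normalisation lives only in `parse`, there is no backwards counterpart that could generate arbitrarily many spaces, which is the looping problem mentioned above.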

3 Summing Up

3.1 Summing up

3.1.1 Summary

Using OAA, we constructed a community of agents that is capable of conducting a dialogue with a user about tickets. We used different programming languages (Prolog, Java) and technologies (DCG, ICL) in our work, as well as some structures of our own invention, such as the noun linked graph.

Because of the limited time available for the project, we did not consider many of the possible cases in dialogue handling. However, the system shows the expected behavior and is able to recommend several types of tickets. It can also recommend tickets for groups of people, for example a family with children and a dog.

From this project we learned a lot about natural language processing, dialogue handling and open distributed systems. Our main focus was on keeping the system open to future improvements, so that important new features can be added without essential changes to existing system components.

3.1.2 Future Work

Extending

As a first step, some agents should be improved to consider more situations in dialogue, so that the system shows more natural behaviour. Because of the system's distributed nature, some agents have to be changed together in order to improve the system's behaviour. Also, some capabilities should be moved from one agent to another. For example, the output agent should handle all natural language synthesis, and the recommender should directly support requests for several tickets (group tickets).

Further development can include implementing new agents with different capabilities, for example:

- speech recognition

- 3-D avatar

- showing different moods

- web interface

- telephone service deployment

- …

All these new features would make the system more efficient and more comfortable to use.

Refactoring

There is also another direction of development, with the goal of making the system clearer and more readable by refactoring and discovering appropriate abstractions. Possible developments would then include:

- Factor out the rules that guide the system's dialogue behaviour and put them into the database.

- Factor out the parsing/serializing rules and put them into the database.

- Extend the database so that anything meaningful the user asserts can be represented and stored.

- Factor out an intermediate language between natural language and a compiled12) database query, and use it to simplify the parser/serializer and for storing generalized facts about the database.

- Support entering all information the system uses as natural language.

- …

Of course, these two directions are orthogonal and could be followed simultaneously.

One might also consider doing this:

- Fix all remaining bugs

4 Appendix

4.1 Download and installation instructions

4.1.1 Requirements

Our project needs the Open Agent Architecture (OAA) framework for running its agents. This means that you must first install OAA in order to run the OAA facilitator and our agents. You also need a Java virtual machine for running the Java parts of the project. At the moment the distribution works on Windows XP. On Windows Vista the StartIt program does not work, so the user has to start all agents manually.

4.1.2 Installing the OAA distribution

- Download the OAA distribution v2.3 from the OAA download page or using these links:

  - Windows distribution

  - Linux distribution [If you use this, you need SICStus version 3]

- Extract the OAA distribution on a computer near you

- From the doc folder of your OAA distribution copy the file setup1.pl to C:\ or to your home directory if you are running Linux.

- Now rename this copied file to setup.pl

setup.pl is the configuration file of OAA; here you can set where the system can find the facilitator. In our case the whole agent community has to run on the same machine, so the default configuration with localhost should be OK.13)

4.1.3 Installing our Vending Machine distribution

- Download our Vending Machine from here (tested on Windows XP).

- Extract the vendingMachine folder from the archive to the runtime directory in your OAA distribution.

- In the newly extracted vendingMachine directory, you can use the starter.bat batch file to start the Vending Machine program.14)

- The StartIt program is used to start and control the whole community of Vending Machine agents; hit the big blue button to start them all.

4.1.4 In case of problems

Please refer to the README file of the OAA distribution.

Some hints: OAA agents are programs that communicate over TCP sockets, so if you have connection problems between the agents and the facilitator, check your firewall and network settings. If you still have problems, you can try changing localhost to your computer name or your IP address in the setup.pl file, or you can try deactivating your network adapter.
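When a firewall is suspected, a quick test of whether a TCP connection to the facilitator can be opened at all may help. The following Python sketch is not part of the distribution; the function name `can_connect` is made up, and the host and port must be taken from your own setup.pl.

```python
import socket


def can_connect(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) can be opened.

    host and port are whatever your setup.pl names for the facilitator;
    no default port is assumed here.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolved host names.
        return False
```

If `can_connect("localhost", port)` returns False while the facilitator is running, the problem probably lies in the firewall or network settings rather than in the agents themselves.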

For more information on installing OAA, please refer to the OAA web site.

4.2 User Manual

4.2.1 Starting The Vending Machine with StartIt

After the installation you can use the batch script oaa/runtime/vendingMachine/starter.bat (where oaa is the path where you installed the OAA framework) to launch the StartIt program. With StartIt it is easy to launch all agents together.

Just hit the blue 'Start' button to start all agents. After that you can see the state of each agent from the lights on the left side; if they all turn green, all the agents have started correctly and are running. Two other windows will appear: one is the user interface and the other is the OAA monitor. Now you can use the Vending Machine by typing your query in the interface. Please refer to the user interface description to learn how to use the interface.

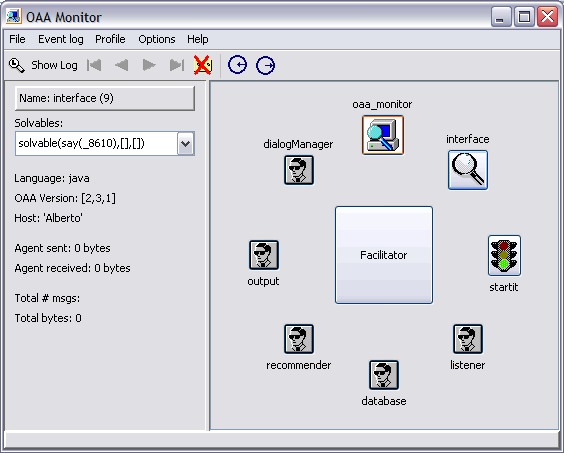

As mentioned before, StartIt is configured to also start the OAA monitor. The OAA monitor is a very useful tool for managing and controlling the agents.

The monitor is not required by the Vending Machine, so if you like, you can close it.

WARNING: when you have finished, always remember to kill all agents and the facilitator before closing StartIt. We recommend using the 'kill all and exit' command from the 'kill' menu of StartIt. If you forget to kill one or more agents or the facilitator before closing StartIt, they will keep running on your computer, using memory and consuming CPU cycles. In this case you have to terminate them manually.

4.2.2 Querying the Vending Machine

4.2.2.1 Hints for using the system:

We assume that we are parsing the output of speech recognition, hence:

- Spelling mistakes and punctuation are not dealt with

- Pauses in speech are assumed to divide the sentence into subordinate clauses using commas. These are separated from the words with a whitespace.

- All numbers are represented with digits only.

- The speech recogniser is assumed to output “I am” and “is not”, etc. and never “I'm” or “isn't”.

To clear the information state and initialise a new session, type “clear”.
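When typing queries by hand, the conventions listed above can be applied mechanically before the text is sent to the system. The following Python sketch is only an illustration and not part of the Vending Machine: the function `normalise` and the two small lookup tables are hypothetical and would need to be extended for real use.

```python
import re

# Hypothetical, deliberately small tables; extend as needed.
CONTRACTIONS = {"i'm": "I am", "isn't": "is not", "don't": "do not"}
NUMBER_WORDS = {"one": "1", "two": "2", "three": "3", "nine": "9", "ten": "10"}


def normalise(text):
    """Rewrite typed input into the form assumed from speech recognition."""
    text = text.replace("\u2019", "'")          # typographic apostrophe
    text = re.sub(r"\s*,\s*", " , ", text)      # commas become separate tokens
    out = []
    for token in text.split():
        token = token.strip(".!?;:")            # other punctuation is not handled
        if not token:
            continue
        low = token.lower()
        # Expand contractions and write number words as digits.
        out.append(CONTRACTIONS.get(low) or NUMBER_WORDS.get(low) or token)
    return " ".join(out)
```

For instance, "I'm nine, and my dog isn't." becomes "I am 9 , and my dog is not", which follows the comma, digit and contraction conventions above.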

A sample simple dialogue

    User: hello
    Vending Machine: Hej! How can I help you
    User: i want a ticket
    Vending Machine: How often do you travel?
    User: often
    Vending Machine: how old are you?
    User: 9
    Vending Machine: We recommended you :youth month ticket, and it costs:385.0kr for 1 tickets
    ...

A Price Query, and use of it

    User: how much is a month ticket
    Vending Machine: a month ticket costs 515 kronor
    Vending Machine: you can travel freely within Göteborg for 30 days
    Vending Machine:
    User: i want it
    Vending Machine: Here is your ticket. Do you need something else?
    ...

Example of failed parse and use of keywords

    User: woof woof i need a ticket for my dog woof
    Vending Machine: are you a dog?
    User: yes
    Vending Machine: Do you want a recommendation?
    User: yes
    Vending Machine: Say something more about yourself, maybe we can recommend you a better ticket
    ...

5 Links

- [probdef2007] Problem Definition

- [infostate2000] Staffan Larsson and David Traum (2000). Information state and dialogue management in the TRINDI Dialogue Move Engine Toolkit

- [oaa2005] OAA© 2.3.1 Documentation